Effective data analytics is a critical factor in decision-making for businesses of all sizes. Handling large and growing volumes of data efficiently requires scalable data models, and Snowflake has become a popular platform for building them. For professionals developing advanced data-handling skills, a data analyst course or data analytics course in Mumbai can be a valuable starting point for understanding such technologies. This article covers the key features, steps, and best practices for building scalable data models with Snowflake, and how they help businesses achieve better analytics outcomes.

Why Choose Snowflake for Data Modeling?

Snowflake is a cloud-based data platform known for its flexibility, performance, and ease of use. Unlike traditional data warehouses, it separates compute from storage, so each can be scaled independently. You can size compute resources (virtual warehouses) to match each workload and suspend them when idle, which helps control costs while keeping queries fast.
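
The cost side of separating compute from storage comes down to simple arithmetic. The sketch below estimates compute credits for a workload, assuming the standard-warehouse rates in which each size step doubles the hourly credit rate (X-Small = 1 credit per hour) and per-second billing with a 60-second minimum each time a warehouse resumes. The `estimate_credits` helper and its size table are illustrative, not a Snowflake API; confirm current rates and the price per credit for your edition and region.

```python
# Hourly credit rates for standard virtual warehouses (assumed from Snowflake's
# published rates): each size step doubles both compute and the hourly rate.
CREDITS_PER_HOUR = {
    "X-Small": 1,
    "Small": 2,
    "Medium": 4,
    "Large": 8,
    "X-Large": 16,
    "2X-Large": 32,
    "3X-Large": 64,
    "4X-Large": 128,
}

# Each time a warehouse resumes, it is billed for at least 60 seconds.
MINIMUM_BILLED_SECONDS = 60


def estimate_credits(size: str, run_seconds: list[int]) -> float:
    """Estimate compute credits for a warehouse of the given size.

    run_seconds holds one entry per resume: how long the warehouse ran,
    including idle time before auto-suspend. Storage is billed separately
    and does not depend on warehouse size.
    """
    rate_per_second = CREDITS_PER_HOUR[size] / 3600
    billed_seconds = sum(max(s, MINIMUM_BILLED_SECONDS) for s in run_seconds)
    return billed_seconds * rate_per_second


if __name__ == "__main__":
    # If a job takes 20 minutes on Medium and scales perfectly to 10 minutes
    # on Large, both runs cost the same number of credits (about 1.33).
    print(round(estimate_credits("Medium", [20 * 60]), 2))
    print(round(estimate_credits("Large", [10 * 60]), 2))
    # Ten separate 5-second runs are each billed as a full minute.
    print(round(estimate_credits("X-Small", [5] * 10), 2))
```

The design lesson: a larger warehouse is not automatically more expensive for a job that parallelizes well, while many short, separate runs are penalized by the minimum charge.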

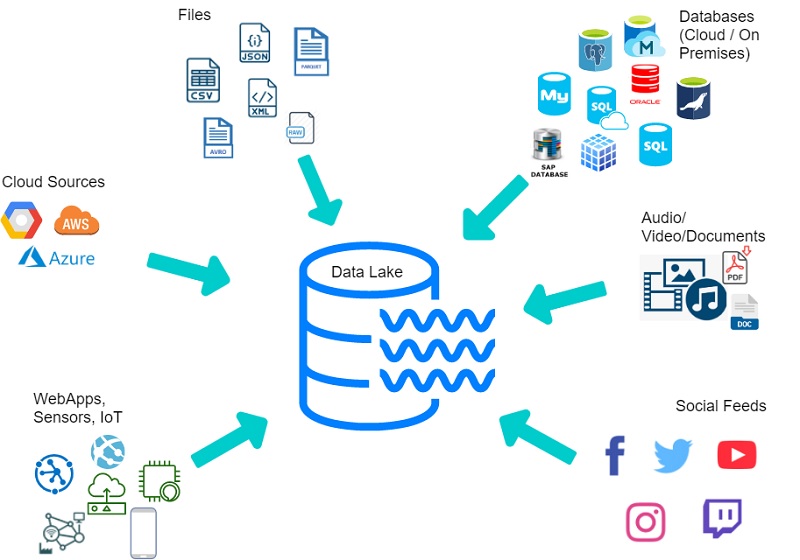

Another critical benefit of Snowflake is its support for diverse data types, including structured, semi-structured, and unstructured data. This flexibility makes it an excellent choice for modern businesses that deal with a variety of data formats. Snowflake also provides robust security, built-in data-sharing capabilities, and support for third-party integrations.

Key Features Supporting Scalable Data Models

To build scalable data models efficiently, make full use of Snowflake’s core features. The most important ones are:

1. Multi-Cluster Warehouses

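Snowflake’s multi-cluster warehouses scale out automatically during peak workloads. When concurrent demand increases and queries start to queue, Snowflake starts additional clusters, up to a configured maximum, to maintain query performance. When the workload drops, the extra clusters shut down, optimizing resource usage and reducing costs. Scaling out in this way addresses concurrency (many simultaneous queries); speeding up a single large query calls for a larger warehouse size instead.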
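
As a minimal sketch, the hypothetical helper below builds the `CREATE WAREHOUSE` statement for an auto-scaling multi-cluster warehouse; you would run the resulting SQL in a worksheet or through a Snowflake client. It assumes an account on Enterprise Edition or higher, which multi-cluster warehouses require, and it interpolates names without escaping, so pass only trusted identifiers. The default size and cluster limits are placeholders.

```python
def multi_cluster_warehouse_ddl(
    name: str,
    size: str = "MEDIUM",
    min_clusters: int = 1,
    max_clusters: int = 3,
    scaling_policy: str = "STANDARD",
    auto_suspend_seconds: int = 300,
) -> str:
    """Build a CREATE WAREHOUSE statement for a multi-cluster warehouse (hypothetical helper).

    With MIN_CLUSTER_COUNT < MAX_CLUSTER_COUNT the warehouse runs in auto-scale
    mode: Snowflake starts extra clusters when queries queue and shuts them down
    as load drops. STANDARD favours starting clusters to avoid queuing; ECONOMY
    favours keeping running clusters busy to conserve credits.
    """
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("require 1 <= min_clusters <= max_clusters")
    if scaling_policy not in ("STANDARD", "ECONOMY"):
        raise ValueError("scaling_policy must be STANDARD or ECONOMY")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name}\n"
        f"  WAREHOUSE_SIZE = '{size}'\n"
        f"  MIN_CLUSTER_COUNT = {min_clusters}\n"
        f"  MAX_CLUSTER_COUNT = {max_clusters}\n"
        f"  SCALING_POLICY = '{scaling_policy}'\n"
        f"  AUTO_SUSPEND = {auto_suspend_seconds}\n"
        f"  AUTO_RESUME = TRUE;"
    )


if __name__ == "__main__":
    print(multi_cluster_warehouse_ddl("reporting_wh", max_clusters=4))
```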

2. Data Cloning

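Snowflake’s zero-copy cloning creates copies of databases, schemas, or tables without physically duplicating the underlying data: the clone initially shares the original’s storage, and additional storage is consumed only as either copy is modified. This makes it handy for testing, development, and analytics, since it reduces storage costs and speeds up workflows.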
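
Cloning is a single SQL statement. The hypothetical helper below generates it for a database, schema, or table, optionally combined with Time Travel to clone the source as it was some seconds ago. Object names such as `analytics.orders_dev` are placeholders, and the helper deliberately limits itself to three object types.

```python
from typing import Optional

# Object types this sketch allows; Snowflake can clone some other objects too.
CLONEABLE_TYPES = {"DATABASE", "SCHEMA", "TABLE"}


def clone_statement(object_type: str, target: str, source: str,
                    offset_seconds: Optional[int] = None) -> str:
    """Build a zero-copy CLONE statement (hypothetical helper)."""
    kind = object_type.upper()
    if kind not in CLONEABLE_TYPES:
        raise ValueError(f"unsupported object type: {object_type}")
    statement = f"CREATE {kind} {target} CLONE {source}"
    if offset_seconds is not None:
        if offset_seconds <= 0:
            raise ValueError("offset_seconds must be positive")
        # Combine cloning with Time Travel: clone the source as it was
        # offset_seconds ago (must fall within its retention period).
        statement += f" AT(OFFSET => -{offset_seconds})"
    return statement + ";"


if __name__ == "__main__":
    # A development copy of a production table, made without copying data.
    print(clone_statement("table", "analytics.orders_dev", "analytics.orders"))
```

For example, `clone_statement("database", "dev_db", "prod_db")` yields `CREATE DATABASE dev_db CLONE prod_db;`.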

3. Time Travel

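Snowflake’s Time Travel feature lets users query, clone, or restore data as it existed at any point within a configurable retention period (1 day by default; up to 90 days for permanent objects on Enterprise Edition and higher). This is useful for recovering accidentally deleted or modified data, running historical analyses, or tracking changes over time.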
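
The following sketch generates the basic Time Travel statements: querying a table at an earlier point, changing its retention period, and restoring a dropped table. The helper and table names are hypothetical; the `AT(OFFSET => ...)`, `AT(TIMESTAMP => ...)`, `DATA_RETENTION_TIME_IN_DAYS`, and `UNDROP TABLE` syntax is standard Snowflake SQL.

```python
from typing import Optional


def time_travel_select(table: str, *, offset_seconds: Optional[int] = None,
                       timestamp: Optional[str] = None) -> str:
    """Query a table as it existed in the past; pass exactly one point in time."""
    if (offset_seconds is None) == (timestamp is None):
        raise ValueError("pass exactly one of offset_seconds or timestamp")
    if offset_seconds is not None:
        return f"SELECT * FROM {table} AT(OFFSET => -{offset_seconds});"
    return f"SELECT * FROM {table} AT(TIMESTAMP => '{timestamp}'::TIMESTAMP_LTZ);"


def set_retention(table: str, days: int) -> str:
    """Change the Time Travel retention period of a table."""
    # 0 disables Time Travel; values above 1 require Enterprise Edition or higher.
    if not 0 <= days <= 90:
        raise ValueError("retention must be between 0 and 90 days")
    return f"ALTER TABLE {table} SET DATA_RETENTION_TIME_IN_DAYS = {days};"


def restore_dropped_table(table: str) -> str:
    # Works only while the dropped table is still within its retention period.
    return f"UNDROP TABLE {table};"


if __name__ == "__main__":
    print(time_travel_select("sales.orders", offset_seconds=30 * 60))
    print(set_retention("sales.orders", 30))
    print(restore_dropped_table("sales.orders"))
```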

4. Data Sharing

With Snowflake’s Secure Data Sharing, you can share live, read-only data with other teams, departments, or even external organizations without moving or copying it. This simplifies collaboration and ensures that all stakeholders work from the same, consistent data.
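
On the provider side, sharing a table takes a handful of grants. This sketch, with hypothetical share, database, table, and account names, generates them; it assumes the statements run under a role allowed to create shares (such as ACCOUNTADMIN) and that consumer accounts are given as `organization.account` identifiers.

```python
def share_table_statements(share: str, database: str, schema: str, table: str,
                           consumer_accounts: list[str]) -> list[str]:
    """Statements a provider runs to share one table (hypothetical helper)."""
    if not consumer_accounts:
        raise ValueError("at least one consumer account is required")
    return [
        f"CREATE SHARE {share};",
        # Consumers need USAGE on the containing database and schema...
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share};",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share};",
        # ...and SELECT on the shared table itself; no data is copied.
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO SHARE {share};",
        f"ALTER SHARE {share} ADD ACCOUNTS = {', '.join(consumer_accounts)};",
    ]


if __name__ == "__main__":
    for stmt in share_table_statements(
        "sales_share", "sales_db", "public", "orders", ["partner_org.partner_acct"]
    ):
        print(stmt)
```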

5. Support for Semi-Structured Data

Snowflake can natively load and query semi-structured data such as JSON, Parquet, and Avro, which makes it a powerful choice for organizations with diverse data formats. Semi-structured records are stored in VARIANT columns, which Snowflake optimizes internally into a columnar form where possible, so they can be queried efficiently with standard SQL.
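
To illustrate how querying semi-structured data works, the sketch below pairs a Snowflake query that uses path notation and `LATERAL FLATTEN` with a small pure-Python function that produces the same rows locally from one JSON document, so the behaviour can be tested without a Snowflake account. The `raw.orders` table, its VARIANT column `src`, and the JSON field names are assumptions for illustration.

```python
import json

# The Snowflake query this sketch emulates (table, column, and fields are hypothetical).
FLATTEN_QUERY = """
SELECT
  src:order_id::NUMBER       AS order_id,
  src:customer.name::STRING  AS customer_name,
  item.value:sku::STRING     AS sku,
  item.value:qty::NUMBER     AS qty
FROM raw.orders,
  LATERAL FLATTEN(input => src:items) item;
"""


def flatten_order(raw_json: str) -> list[tuple]:
    """Return one row per element of the "items" array, like LATERAL FLATTEN.

    Nested fields (customer.name) are read by path, and the parent's fields
    are repeated on every row produced from the array.
    """
    src = json.loads(raw_json)
    return [
        (src["order_id"], src["customer"]["name"], item["sku"], item["qty"])
        for item in src.get("items", [])
    ]


if __name__ == "__main__":
    doc = ('{"order_id": 1001, "customer": {"name": "Asha"}, '
           '"items": [{"sku": "A-1", "qty": 2}, {"sku": "B-7", "qty": 1}]}')
    for row in flatten_order(doc):
        print(row)
```

As with `LATERAL FLATTEN` without `OUTER => TRUE`, an order whose `items` array is empty produces no rows.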

Steps to Build Scalable Data Models with Snowflake

Building scalable data models on Snowflake involves several steps to ensure data integrity, performance, and cost efficiency. Here’s a step-by-step guide:

Step 1: Understand the Business Requirements

Before creating a data model, it is crucial to understand the business objectives, key metrics, and data sources. Identify the type of analyses the model will support, such as sales forecasting, customer segmentation, or operational reporting.

Step 2: Define the Data Architecture

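Design the architecture around your organization’s needs. A common approach is to organize data into layers, such as raw, staging, and analytics-ready, often implemented as separate schemas or databases. Because each layer has a single, well-defined purpose, this layered approach improves data quality and makes it easy to trace any analytics result back to its source data.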
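
One way to set up the layers is one schema per layer inside a database. The sketch below, with a hypothetical database name and helper, generates that DDL. As a design assumption it makes the staging schema transient, since staging data can be rebuilt from the raw layer and transient objects avoid Fail-safe storage costs.

```python
# Hypothetical layer layout: schema name -> purpose (stored as a comment).
LAYERS = {
    "raw": "Data as ingested, unmodified",
    "staging": "Cleaned, typed, and deduplicated data",
    "analytics": "Modeled, analytics-ready tables",
}


def layer_ddl(database: str, transient_staging: bool = True) -> list[str]:
    """Generate one schema per data layer (hypothetical helper)."""
    statements = [f"CREATE DATABASE IF NOT EXISTS {database};"]
    for layer, purpose in LAYERS.items():
        # Tables in a transient schema have no Fail-safe period.
        transient = transient_staging and layer == "staging"
        kind = "TRANSIENT SCHEMA" if transient else "SCHEMA"
        statements.append(
            f"CREATE {kind} IF NOT EXISTS {database}.{layer} COMMENT = '{purpose}';"
        )
    return statements


if __name__ == "__main__":
    print("\n".join(layer_ddl("analytics_db")))
```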

Step 3: Optimize Table Design

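For efficient querying, design tables around how the data will be analyzed. Two dimensional-modeling patterns are widely used for analytics on Snowflake: the star schema, in which a central fact table joins directly to denormalized dimension tables (fewer joins, simpler queries), and the snowflake schema, in which dimensions are normalized into sub-tables (less redundancy, more joins). Select the schema that best aligns with your performance requirements and data relationships.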
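
A star schema is easy to see in a small example. This sketch uses Python’s built-in `sqlite3` module as a local stand-in for Snowflake so it runs anywhere; the table names, columns, and rows are hypothetical. The modeling pattern, one fact table keyed to denormalized dimension tables, carries over unchanged, although Snowflake records but does not enforce foreign-key constraints on standard tables.

```python
import sqlite3


def build_star_schema(conn: sqlite3.Connection) -> None:
    """Create a tiny star schema: one fact table and two dimension tables."""
    conn.executescript("""
        CREATE TABLE dim_product (
            product_key  INTEGER PRIMARY KEY,
            product_name TEXT,
            category     TEXT          -- denormalized: kept on the dimension row
        );
        CREATE TABLE dim_date (
            date_key      INTEGER PRIMARY KEY,
            calendar_date TEXT,
            year_month    TEXT
        );
        CREATE TABLE fact_sales (
            date_key    INTEGER REFERENCES dim_date(date_key),
            product_key INTEGER REFERENCES dim_product(product_key),
            quantity    INTEGER,
            revenue     REAL
        );
        INSERT INTO dim_product VALUES
            (1, 'Tea', 'Beverages'), (2, 'Coffee', 'Beverages'), (3, 'Biscuits', 'Snacks');
        INSERT INTO dim_date VALUES
            (20240101, '2024-01-01', '2024-01'), (20240201, '2024-02-01', '2024-02');
        INSERT INTO fact_sales VALUES
            (20240101, 1, 10, 50.0), (20240101, 3, 5, 25.0),
            (20240201, 2, 4, 60.0), (20240201, 3, 2, 10.0);
    """)


def revenue_by_category_and_month(conn: sqlite3.Connection) -> list[tuple]:
    """Typical star-schema query: the fact table joins directly to each dimension."""
    return conn.execute("""
        SELECT p.category, d.year_month, SUM(f.revenue) AS revenue
        FROM fact_sales f
        JOIN dim_product p ON p.product_key = f.product_key
        JOIN dim_date d ON d.date_key = f.date_key
        GROUP BY p.category, d.year_month
        ORDER BY p.category, d.year_month
    """).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_star_schema(conn)
    for row in revenue_by_category_and_month(conn):
        print(row)
```

In a snowflake schema, `category` would move into its own `dim_category` table referenced from `dim_product`, adding one more join to the query.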

Step 4: Implement Partitioning and Clustering

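Partitioning and clustering improve query performance by grouping related data together so that queries can skip data they do not need. Snowflake handles partitioning automatically: it divides every table into micro-partitions and records metadata such as the minimum and maximum value of each column in each partition, which lets it prune partitions that cannot match a query’s filter. For very large tables that are frequently filtered on particular columns, you can also define clustering keys to keep related rows in the same micro-partitions. Maintaining a clustering key consumes credits, so it is usually not worthwhile for small tables.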
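
The sketch below is a simplified model of micro-partition pruning, not Snowflake’s implementation: each partition keeps only the minimum and maximum of one column, and a query scans only the partitions whose range overlaps its filter. Sorting the rows stands in for what a clustering key such as `ALTER TABLE sales CLUSTER BY (sale_date)` maintains over time. Real micro-partitions hold 50 to 500 MB of uncompressed data each, and `SYSTEM$CLUSTERING_INFORMATION` reports how well a table is clustered.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    """Simplified partition metadata: min/max of a single column."""
    min_value: int
    max_value: int


def build_partitions(values: list[int], rows_per_partition: int) -> list[Partition]:
    """Split rows into fixed-size partitions in arrival order, keeping min/max."""
    partitions = []
    for start in range(0, len(values), rows_per_partition):
        chunk = values[start:start + rows_per_partition]
        partitions.append(Partition(min(chunk), max(chunk)))
    return partitions


def partitions_scanned(partitions: list[Partition], low: int, high: int) -> int:
    """Count partitions whose [min, max] overlaps the filter [low, high]; the rest are pruned."""
    return sum(1 for p in partitions if p.max_value >= low and p.min_value <= high)


if __name__ == "__main__":
    # 1,000 rows covering 100 distinct day numbers, loaded in interleaved order.
    arrival_order = [i % 100 for i in range(1000)]
    unclustered = build_partitions(arrival_order, 100)
    clustered = build_partitions(sorted(arrival_order), 100)
    # Filter: WHERE day = 42
    print(partitions_scanned(unclustered, 42, 42))  # 10: every partition spans days 0-99
    print(partitions_scanned(clustered, 42, 42))    # 1: only the partition for days 40-49
```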

Step 5: Leverage Virtual Warehouses

Use separate virtual warehouses for different workloads, such as data loading, transformation, and reporting. This prevents resource contention, ensures consistent performance for each operation, and lets you size, suspend, and track the cost of each workload independently.
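
As a minimal sketch of workload isolation, the hypothetical layout below gives each workload its own warehouse and grants usage on it to the role that runs that workload. The warehouse names, sizes, auto-suspend values, and roles are placeholders to tune against your own workloads.

```python
# Hypothetical layout: warehouse name -> (size, auto-suspend seconds, role).
WORKLOADS = {
    "load_wh": ("SMALL", 60, "loader"),
    "transform_wh": ("MEDIUM", 60, "transformer"),
    "reporting_wh": ("SMALL", 300, "analyst"),
}


def workload_warehouse_ddl(workloads: dict) -> list[str]:
    """Create one warehouse per workload and grant it to that workload's role."""
    statements = []
    for name, (size, auto_suspend, role) in workloads.items():
        statements.append(
            f"CREATE WAREHOUSE IF NOT EXISTS {name} "
            f"WAREHOUSE_SIZE = '{size}' AUTO_SUSPEND = {auto_suspend} "
            f"AUTO_RESUME = TRUE INITIALLY_SUSPENDED = TRUE;"
        )
        # Grant usage to the role that runs this workload, so each team's
        # queries land on (and are billed to) its own warehouse.
        statements.append(f"GRANT USAGE ON WAREHOUSE {name} TO ROLE {role};")
    return statements


if __name__ == "__main__":
    print("\n".join(workload_warehouse_ddl(WORKLOADS)))
```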

Step 6: Monitor and Optimize Queries

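Snowflake provides tools to track query performance and resource usage. Query Profile shows how an individual query executed, step by step, which helps pinpoint expensive operations such as large table scans or spilling to disk. Resource Monitors track credit consumption and can send notifications or suspend warehouses when a quota is reached. Review these metrics regularly to identify bottlenecks and optimize queries for better performance.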
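
The sketch below generates a monthly resource monitor and attaches it to a warehouse, plus a query against the `SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY` view that lists the slowest recent queries, which are good candidates for inspection in Query Profile. Creating resource monitors typically requires the ACCOUNTADMIN role, ACCOUNT_USAGE views are populated with some delay, and the helper, monitor name, quota, and thresholds here are hypothetical.

```python
def resource_monitor_ddl(name: str, credit_quota: int, warehouse: str,
                         notify_at: int = 80, suspend_at: int = 100) -> list[str]:
    """Monthly credit quota with a warning threshold and a suspend threshold."""
    if not 0 < notify_at < suspend_at:
        raise ValueError("require 0 < notify_at < suspend_at")
    return [
        f"CREATE RESOURCE MONITOR {name} WITH CREDIT_QUOTA = {credit_quota} "
        f"FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY "
        # DO SUSPEND lets running queries finish before the warehouse suspends.
        f"TRIGGERS ON {notify_at} PERCENT DO NOTIFY "
        f"ON {suspend_at} PERCENT DO SUSPEND;",
        f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {name};",
    ]


# Slowest queries over the last 7 days (TOTAL_ELAPSED_TIME is in milliseconds).
SLOWEST_QUERIES_SQL = """
SELECT query_id, warehouse_name,
       total_elapsed_time / 1000 AS elapsed_s,
       bytes_scanned
FROM snowflake.account_usage.query_history
WHERE start_time >= DATEADD(day, -7, CURRENT_TIMESTAMP())
ORDER BY total_elapsed_time DESC
LIMIT 20;
"""

if __name__ == "__main__":
    print("\n".join(resource_monitor_ddl("monthly_limit", 100, "reporting_wh")))
    print(SLOWEST_QUERIES_SQL)
```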

Best Practices for Scalability

While Snowflake provides robust tools for building scalable data models, following best practices ensures long-term success:

- Automate Data Pipelines: Use tools like Snowpipe for continuous data ingestion and automate transformation workflows to maintain consistent data freshness.

- Minimize Data Duplication: Leverage Snowflake’s cloning feature to avoid unnecessary data copies, reducing storage costs.

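- Secure Data Access: Implement role-based access control (RBAC) to maintain security and compliance. Snowflake encrypts all data at rest and in transit by default; add column-level protections such as masking policies for sensitive data.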

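- Regularly Purge Unused Data: Delete or archive outdated data on a schedule, and set Time Travel retention periods to match your recovery needs. Deleted data continues to occupy (and be billed as) storage until its Time Travel and Fail-safe periods expire, so shorter retention on non-critical tables reduces costs.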
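
Several of these practices map to short SQL statements. The sketch below, with hypothetical helper, object, user, and role names, generates a Snowpipe definition, read-only RBAC grants, and a scheduled purge task. It assumes the pipe reads JSON files from an external stage with cloud event notifications configured (required for `AUTO_INGEST = TRUE`) into a table with a single VARIANT column; the purge retention window is a parameter you choose.

```python
def snowpipe_ddl(pipe: str, target_table: str, stage: str, file_type: str = "JSON") -> str:
    """Continuous ingestion: load each new file that lands in the stage."""
    return (
        f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = TRUE AS "
        f"COPY INTO {target_table} FROM @{stage} "
        f"FILE_FORMAT = (TYPE = '{file_type}');"
    )


def read_only_role_grants(role: str, database: str, schema: str, user: str) -> list[str]:
    """RBAC: a role that can read every table in one schema."""
    scope = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role};",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {scope} TO ROLE {role};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {scope} TO ROLE {role};",
        # Future grants also cover tables created later in the schema.
        f"GRANT SELECT ON FUTURE TABLES IN SCHEMA {scope} TO ROLE {role};",
        f"GRANT ROLE {role} TO USER {user};",
    ]


def purge_task_ddl(task: str, warehouse: str, table: str, ts_column: str,
                   keep_days: int, cron: str = "0 3 * * * UTC") -> list[str]:
    """Scheduled deletion of rows older than keep_days (daily at 03:00 UTC by default)."""
    if keep_days <= 0:
        raise ValueError("keep_days must be positive")
    return [
        f"CREATE TASK IF NOT EXISTS {task} WAREHOUSE = {warehouse} "
        f"SCHEDULE = 'USING CRON {cron}' AS "
        f"DELETE FROM {table} WHERE {ts_column} < "
        f"DATEADD(day, -{keep_days}, CURRENT_TIMESTAMP());",
        # New tasks are created suspended; resuming starts the schedule.
        f"ALTER TASK {task} RESUME;",
    ]


if __name__ == "__main__":
    print(snowpipe_ddl("raw.orders_pipe", "raw.orders", "raw.orders_stage"))
    print("\n".join(read_only_role_grants("analyst", "sales_db", "analytics", "jdoe")))
    print("\n".join(purge_task_ddl("purge_events", "transform_wh", "raw.events", "event_ts", 365)))
```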

Real-World Applications

Many organizations across industries rely on Snowflake to build scalable data models for analytics. For example:

- Retail: A retail chain can use Snowflake to integrate point-of-sale data with inventory and customer demographics, enabling real-time inventory tracking and personalized marketing.

- Healthcare: Hospitals and clinics can analyze patient records and operational data to improve resource allocation and treatment outcomes.

- Finance: Financial institutions can leverage Snowflake to detect fraudulent activities by analyzing transaction patterns in near real-time.

Preparing for the Future with Data Science

Skilled professionals who understand data modeling and analytics are essential to maximize Snowflake’s capabilities. Specialized training can provide a strong foundation in these areas. For instance, enrolling in a data analyst course can equip you with the skills to work with platforms like Snowflake and develop advanced analytical models.

Conclusion

Building scalable data models is a cornerstone of effective data analytics, and Snowflake provides the tools and features to achieve this at scale. Organizations can optimize their data workflows and gain valuable insights by following the outlined steps and best practices. Whether you’re an aspiring data scientist or a business leader, understanding Snowflake’s capabilities is crucial for leveraging data to its full potential.

Investing in the right training is just as important. A data analyst course or data analytics course in Mumbai can help you stay competitive in the field of data analytics by preparing you to build robust, scalable data solutions.

Business Name: Data Science, Data Analyst and Business Analyst Course in Mumbai

Address: 1304, 13th floor, A wing, Dev Corpora, Cadbury junction, Eastern Express Highway, Thane, Mumbai, Maharashtra 400601

Phone: 095132 58922